Integrating Impedance Control and Nonlinear Disturbance Observer for Robot-Assisted Arthroscope Control in Elbow Arthroscopic Surgery

Teng Li, Armin Badre, Hamid D Taghirad, Mahdi Tavakoli

International Conference on Intelligent Robots and Systems (IROS) | Abstract: Robot-assisted arthroscopic surgery is transforming the tradition in orthopaedic surgery. Compliance and stability are essential features that a surgical robot must have for safe physical human-robot interaction (pHRI). Surgical tools attached at the robot end-effector and human-robot interaction will inevitably affect the robot dynamics. This could undermine the utility and stability of the robotic system if the varying robot dynamics are not identified and updated in the robot control law. In this paper, an integrated framework for robot impedance control and nonlinear disturbance observer (NDOB)-based compensation of uncertain dynamics is proposed, where the former ensures compliant robot behavior and the latter compensates for dynamic uncertainties when necessary. The combination of impedance controller and NDOB is analyzed theoretically in three scenarios. A complete simulation and experimental studies … | 2022 | Conference | PDF |

An Observer-Based Responsive Variable Impedance Control for Dual-User Haptic Training System

A Rashvand, R Heidari, M Motaharifar, A Hassani, MR Dindarloo, MJ Ahmadi, K Hashtrudi-Zaad, M Tavakoli, HD Taghirad

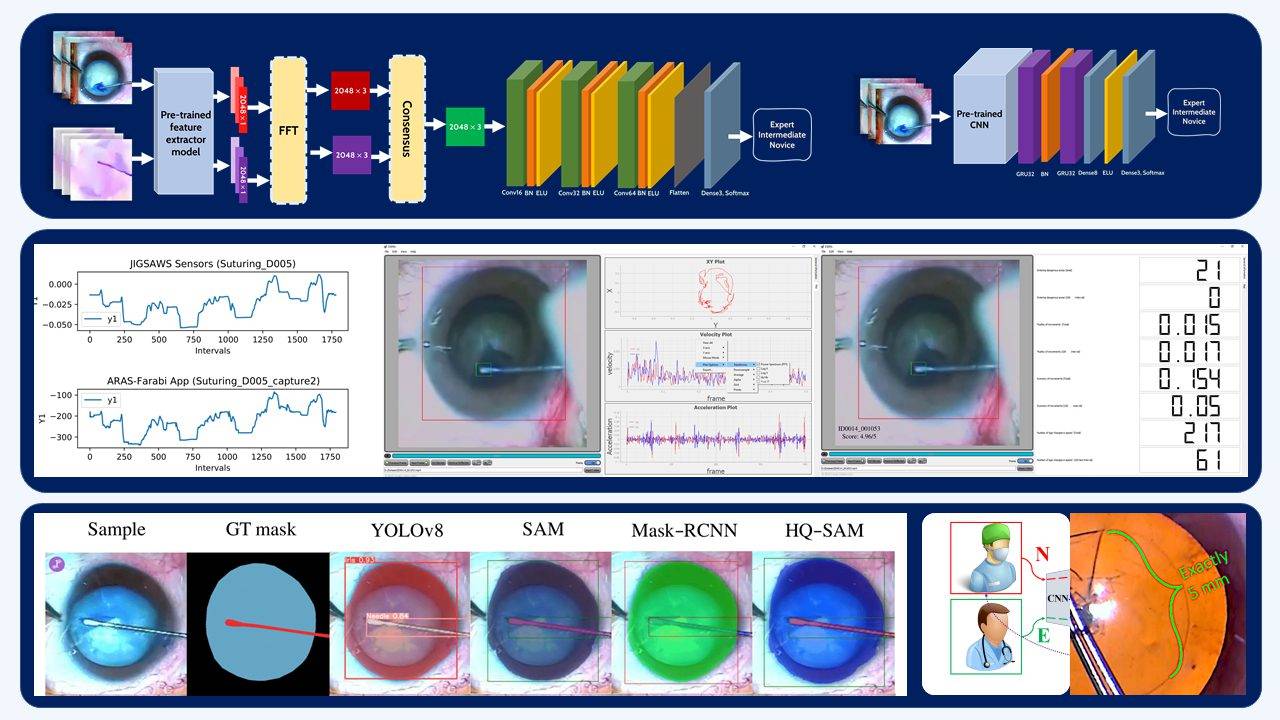

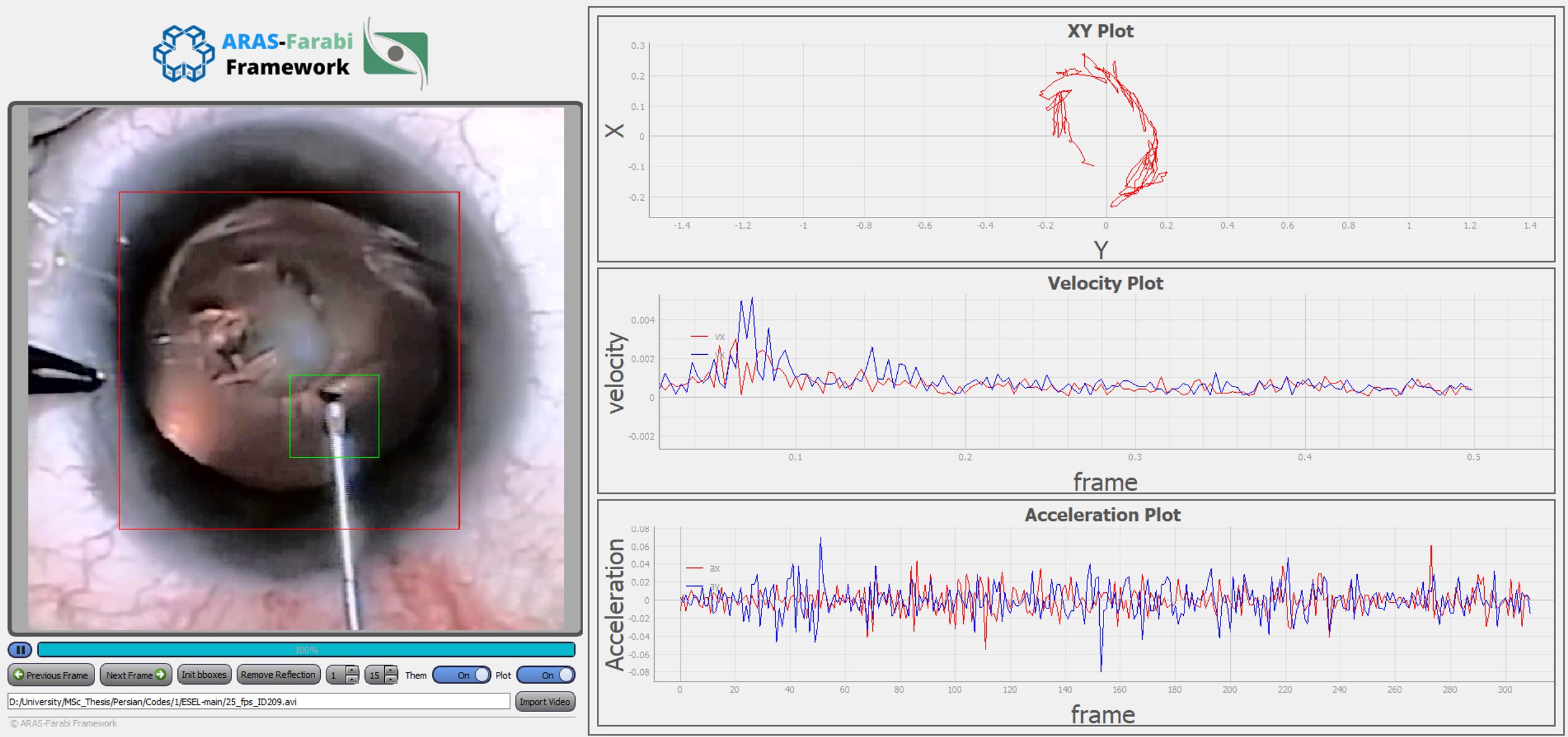

2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) | Abstract: This paper proposes a variable impedance control architecture to facilitate eye surgery training in a dual-user haptic system. In this system, an expert surgeon (the trainer) and a novice surgeon (the trainee) collaborate on a surgical procedure using their own haptic devices. The mechanical impedance parameters of the trainer's haptic device remain constant during the operation, whereas those of the trainee vary with his/her proficiency level. The trainee's relative proficiency might be objectively quantified in real-time based on position error between the trainer and the trainee. The proposed architecture enables the trainer to intervene in the training process as needed to ensure the trainee is following the right course of action and to keep the trainee from causing potential tissue injuries. The stability of the overall nonlinear closed-loop system has been investigated using the input-to-state stability (ISS) criterion. High-gain … | 2022 | Conference | PDF |

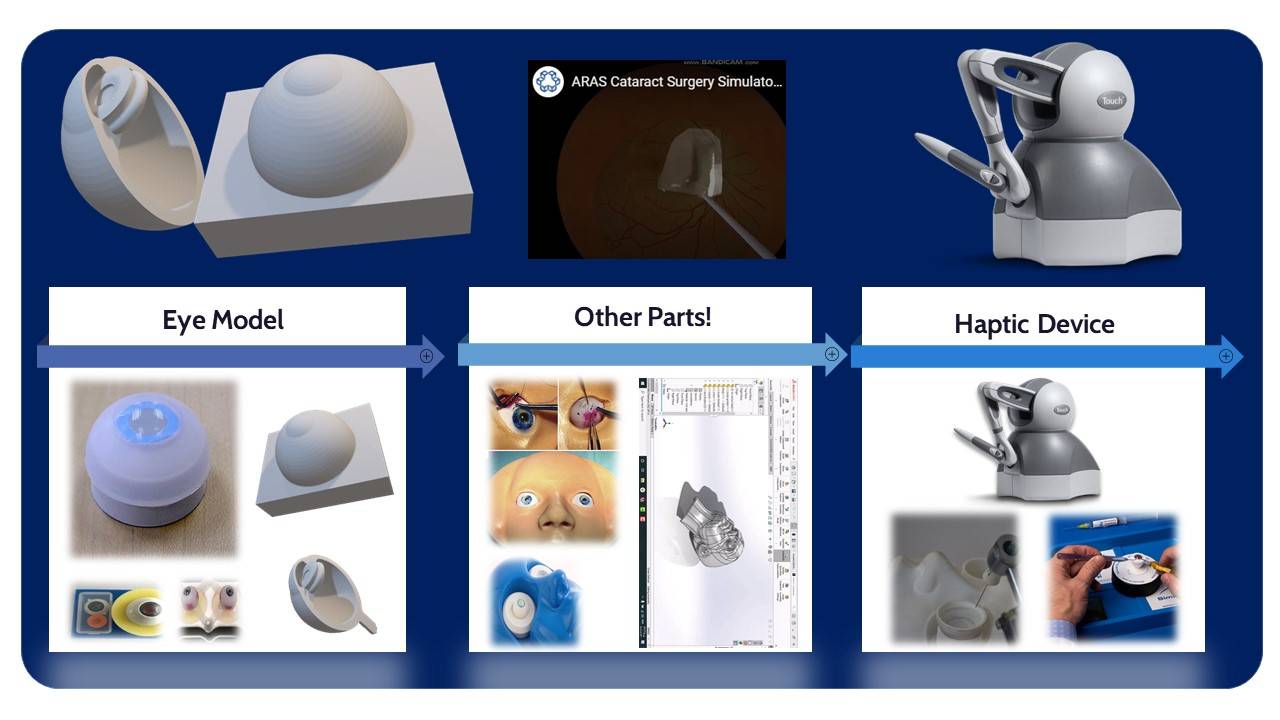

On The Dynamic Calibration and Trajectory Control of ARASH: ASiST

A Hassani, MR Dindarloo, R Khorrambakht, A Bataleblu, R Heidari, M Motaharifar, SF Mohammadi, HD Taghirad

2022 8th International Conference on Control, Instrumentation and Automation (ICCIA) | Abstract: This article investigates the dynamic parameter calibration of ARAS Haptic System for EYE Surgery Training (ARASH:ASiST). ARASH:ASiST is a 3-DOF haptic device developed for intraocular surgery training. In this paper, the linear regression form of the dynamic formulation of the system with respect to its dynamic parameters is derived. Then the dynamic parameters of ARASH:ASiST are calibrated using the least-squares (LS) identification scheme. The cross-validation results for different trajectories indicate that the identified model has a suitable approximate fitness percentage in both translational and rotational motions of the surgical instrument. Finally, a robust model-based controller is implemented on the real prototype using the calibration outcome, and it is verified that, with the estimated dynamic model, the trajectory tracking performance is significantly improved and the tracking error is reduced … | 2022 | Conference | PDF |

Adaptive robust impedance control of haptic systems for skill transfer

Ashkan Rashvand, Mohammad Javad Ahmadi, Mohammad Motaharifar, Mahdi Tavakoli, Hamid D Taghirad

2021 9th RSI International Conference on Robotics and Mechatronics (ICRoM) | Abstract: This paper aims to develop the impedance control structure of a dual-user haptic training system for the application of surgical training. Through the proposed structure, the process of skill transfer from the trainer to the trainee is considered through automatic transformation of impedance coefficients, based on the evaluation of the trainee’s performance and its adaptation to the trainer’s behavior. The similarities between the position of the trainer and the trainee are examined in a time window to generate the base structure for varying impedance coefficients. In the presence of modeling uncertainties, the proposed impedance controller is capable of enforcing two reference impedance dynamics for the trainer and the trainee. In the proposed control structure, a high-gain observer is used to satisfy force demands without requiring expensive sensors. The input-to-state stability (ISS) of the dual-user haptic training system is … | 2021 | Conference | PDF |

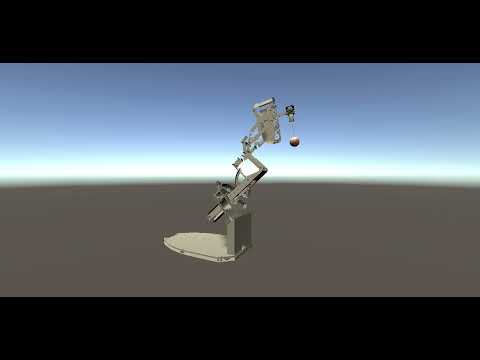

Robust H∞-based control of ARAS-diamond: A vitrectomy eye surgery robot

Abbas Bataleblu, Rohollah Khorrambakht, Hamid D Taghirad

Proceedings of the Institution of Mechanical Engineers, Part C: Journal of Mechanical Engineering Science | Abstract: In this paper, we investigate the challenges of controlling the ARAS-Diamond robot for robotic-assisted eye surgery. To eliminate the effects of the system’s inherent uncertainty on its performance, a cascade control architecture is proposed in this paper. For the inner loop of this structure, two different robust controllers, namely H∞ and μ-synthesis, are synthesized with stability and performance analysis. The outer loop of the structure, on the other hand, controls the orientation of the surgical instrument using a well-tuned PD controller. The stability of the system as a whole, considering both inner and outer loop controllers, is analyzed in detail. Furthermore, implementation results on the real robot are presented to illustrate the effectiveness of the proposed control structure compared to that of conventional controller designs in the presence of inherent uncertainties of the system and external disturbances, and it is … | 2021 | Journal | PDF |

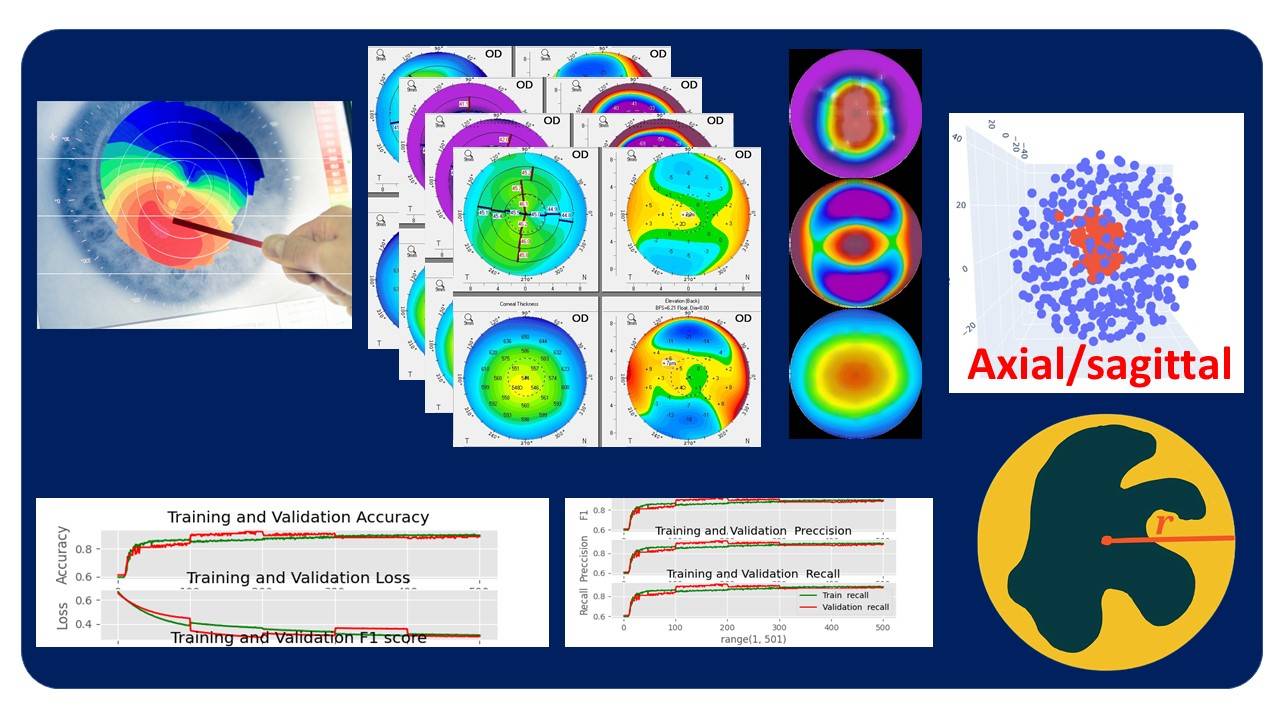

Applications of Haptic Technology, Virtual Reality, and Artificial Intelligence in Medical Training During the COVID-19 Pandemic

Mohammad Motaharifar, Alireza Norouzzadeh, Parisa Abdi, Arash Iranfar, Faraz Lotfi, Behzad Moshiri, Alireza Lashay, Seyed Farzad Mohammadi, Hamid D Taghirad

Frontiers in Robotics and AI, 258 | Abstract: This paper examines how haptic technology, virtual reality, and artificial intelligence reduce physical contact in medical training during the COVID-19 pandemic. Notably, any mistake made by the trainees during the education stages might lead to undesired complications for the patient. Therefore, teaching medical skills to trainees has always been a challenging issue for expert surgeons, and this is even more challenging in pandemics. The current method of surgery training requires novice surgeons to attend some courses, watch some procedures, and conduct their initial operations under the direct supervision of an expert surgeon. Owing to the requirement of physical contact in this method of medical training, the people involved, including the novice and expert surgeons, face a potential risk of infection by the virus. This survey paper reviews recent breakthroughs along with new areas in which assistive technologies might provide a viable solution to reduce physical contact in medical institutes during the COVID-19 pandemic and similar crises. | 2021 | Journal | PDF |

Kinematic and Dynamic Analysis of ARASH ASiST: Toward Micro Positioning

A Hassani, MR Dindarloo, R Khorrambakht, A Bataleblu, H Sadeghi, R Heidari, A Iranfar, P Hasani, NS Hojati, A Khorasani, N KhajeAhmadi, M Motaharifar, H Riazi-Esfahani, A Lashay, SF Mohammadi, HD Taghirad

2021 9th RSI International Conference on Robotics and Mechatronics (ICRoM) | Abstract: This article elaborates on the kinematic and dynamic analysis of ARASH:ASiST, "ARAS Haptic System for Eye Surgery Training", which is developed for vitrectomy eye surgery training. The mechanism selection of this system is reviewed first, in order to assist such a precise intraocular eye surgery training. Then the kinematic and dynamic analysis of the proposed haptic system is investigated. To verify the reported result, a prototype of ARASH:ASiST is modeled in MSC-ADAMS®, and the results of the dynamic formulation are validated. Finally, a common model-based controller is implemented on the real prototype, and it is verified that with such a controller a suitable accuracy of 200 μm is attainable for the surgical instrument. | 2021 | Conference | PDF |

Adaptive Robust Impedance Control of Haptic Systems for Skill Transfer

Ashkan Rashvand, Mohammad Javad Ahmadi, Mohammad Motaharifar, Mahdi Tavakoli, Hamid D Taghirad

2021 9th RSI International Conference on Robotics and Mechatronics (ICRoM) | Abstract: Designing control systems with bounded input is a practical consideration since realizable physical systems are limited by the saturation of actuators. The actuators' saturation degrades the performance of the control system, and in extreme cases, the stability of the closed-loop system may be lost. However, actuator saturation is typically neglected in the design of control systems, with compensation being made in the form of over-designing the actuator or by post-analyzing the resulting system to ensure acceptable performance. | 2021 | Conference | PDF |

A Review on Applications of Haptic Systems, Virtual Reality, and Artificial Intelligence in Medical Training in COVID-19 Pandemic

R Heidari, M Motaharifar, H Taghirad, SF Mohammadi, A Lashay

Journal of Control | Abstract: This paper presents a survey on haptic technology, virtual reality, and artificial intelligence applications in medical training during the COVID-19 pandemic. Over the last few decades, there has been a great deal of interest in using new technologies to establish capable approaches for medical training purposes. These methods are intended to minimize surgery's adverse effects, mostly when done by an inexperienced surgeon. | 2021 | Journal | PDF |

Closed-form Inverse kinematics Equations of a Robotic Finger Mechanism

Mohammad Sina Allahkaram, Mohammad Javad Ahmadi, Hamid D Taghirad

2021 9th RSI International Conference on Robotics and Mechatronics (ICRoM) | Abstract: Deriving the explicit form of direct and inverse kinematics equations is challenging in the kinematic analysis of serial manipulators. In explicit form, the exact solutions of the inverse problem may be obtained with the required accuracy. Furthermore, in controller topologies where inverse kinematics is needed, the explicit form is much preferable because of its easier real-time implementation. Biomimetic robotic hands have complex structures, consisting of a number of cooperating serial kinematic chains. In this paper, a method for obtaining the explicit form of the inverse kinematics equations of a major group of robotic finger structures is proposed by using spatial analysis. By this means, the inverse kinematics of this class of mechanisms is derived in explicit form. To investigate the effectiveness of the proposed method, an open-source model of such mechanisms named InMoov is used, and the accuracy … | 2021 | Conference | PDF |

Dynamic modeling and identification of aras-diamond: A vitreoretinal eye surgery robot

Ali Hassani, Abbas Bataleblu, Seyed Ahmad Khalilpour, Hamid D Taghirad

Modares Mechanical Engineering 21 (11), 783-795 | Abstract: Deriving the accurate dynamic model of robots is pivotal for robot design, control, calibration, and fault detection. To derive an accurate dynamic model of a robot, all the terms affecting the robot's dynamics must be considered, and the dynamic parameters of the robot must be identified with appropriate physical insight. In this paper, first, the kinematics of the ARAS-Diamond spherical parallel robot, which has been developed for vitreoretinal ophthalmic surgery, are investigated. Then, by presenting a formulation based on the principle of virtual work, a linear form of the robot dynamics is derived, and the obtained results are validated in the SimMechanics environment. Furthermore, other terms affecting the robot dynamics are modeled, and by using the linear regression form of the robot dynamics with the required physical bounds on the parameters, the identification process is accomplished adopting the least-squares method with appropriate physical consistency. Finally, by using the criterion of the normalized root mean squared error (NRMSE) and different trajectories, the accuracy of the identified dynamic parameters is evaluated. The experimental validation results demonstrate a good fitness for the actuator torques (about 75 percent) and a positive mass matrix in the entire workspace, which allows us to design common model-based controllers, such as the computed torque method, for precise control of the robot in vitreoretinal ophthalmic surgery. | 2021 | Journal | PDF |

A force reflection robust control scheme with online authority adjustment for dual user haptic system

Mohammad Motaharifar, Hamid D. Taghirad

Mechanical Systems and Signal Processing | Abstract: This article aims at developing a control structure with online authority adjustment for a dual-user haptic training system. In the considered system, the trainer and the trainee are given the facility to cooperatively conduct the surgical operation. The task dominance is automatically adjusted based on the task performance of the trainee with respect to the trainer. To that effect, the average norm of position error between the trainer and the trainee is calculated in a sliding window and the relative task dominance is assigned to the operators accordingly. Moreover, a robust controller is developed to satisfy the requirement of position tracking. The stability analysis based on the input-to-state stability (ISS) methodology is reported. Experimental results demonstrate the effectiveness of the proposed control approach. | 2020 | Journal | PDF |

A Robust Controller with Online Authority Transformation for Dual User Haptic Training System

M Motaharifar, H Taghirad, SF Mohammadi

Journal of Control | Abstract: The design problem for the control of a dual-user haptic surgical training system is studied in this article. The system allows the trainee to perform the task on a virtual environment, while the trainer is able to interfere in the operation and correct probable mistakes made by the trainee. The proposed methodology allows the trainer to transfer the task authority to or from the trainee in real time. | 2020 | Journal | PDF |

Control Synthesis and ISS Stability Analysis of Dual-User Haptic Training System Based on S-Shaped Function

Mohammad Motaharifar, Hamid D. Taghirad, Keyvan Hashtrudi-Zaad, Seyed Farzad Mohammadi

IEEE/ASME Transactions on Mechatronics | Abstract: The controller design and stability analysis of dual user training haptic system is studied. Most of the previously proposed control methodologies for this system have not simultaneously considered special requirements of surgery training and stability analysis of the nonlinear closed loop system which is the objective of this paper. In the proposed training approach, the trainee is allowed to freely experience the task and be corrected as needed, while the trainer maintains the task dominance. A special S-shaped function is suggested to generate the corrective force according to the magnitude of motion error between the trainer and the trainee. The closed loop stability of the system is analyzed considering the nonlinearity of the system components using the Input-to-State Stability (ISS) approach. Simulation and experimental results show the effectiveness of the proposed approach. | 2019 | Conference | PDF |

Control Synthesis and ISS Stability Analysis of a Dual-User Haptic Training System Based on S-Shaped Function

Mohammad Motaharifar, Hamid D. Taghirad, Keyvan Hashtrudi-Zaad, and Seyed Farzad Mohammadi

IEEE/ASME Transactions on Mechatronics | Abstract: The controller design and stability analysis of a dual user training haptic system is studied. Most of the previously proposed control methodologies for this system have not simultaneously considered special requirements of surgery training and stability analysis of the nonlinear closed-loop system which is the objective of this paper. In the proposed training approach, the trainee is allowed to freely experience the task and be corrected as needed, while the trainer maintains the task dominance. A special S-shaped function is suggested to generate the corrective force according to the magnitude of motion error between the trainer and the trainee. The closed-loop stability of the system is analyzed considering the nonlinearity of the system components using the Input-to-State Stability approach. Simulation and experimental results show the effectiveness of the proposed approach. | 2019 | Journal | PDF |

Control of Dual-User Haptic Training System With Online Authority Adjustment: An Observer-Based Adaptive Robust Scheme

Mohammad Motaharifar, Hamid D. Taghirad, Keyvan Hashtrudi-Zaad and Seyed Farzad Mohammadi

IEEE Transactions on Control Systems Technology | Abstract: The design problem for the control of a dual-user haptic surgical training system is studied in this article. The system allows the trainee to perform the task on a virtual environment, while the trainer is able to interfere in the operation and correct probable mistakes made by the trainee. The proposed methodology allows the trainer to transfer the task authority to or from the trainee in real time. The robust adaptive nature of the controller ensures position tracking. The stability of the closed-loop system is analyzed using the input-to-output stability approach and the small-gain theorem. Simulation and experimental results are presented to validate the effectiveness of the proposed control scheme. | 2019 | Journal | PDF |

A Force Reflection Impedance Control Scheme for Dual User Haptic Training System

M. Motaharifar, A. Iranfar, and H. D. Taghirad

2019 27th Iranian Conference on Electrical Engineering (ICEE) | Abstract: In this paper, an impedance control based training scheme for a dual user haptic surgery training system is introduced. The training scheme allows the novice surgeon (trainee) to perform a surgery operation while an expert surgeon (trainer) supervises the procedure. Through the proposed impedance control structure, the trainer receives the trainee’s position to detect his (her) wrong movements. Besides, a novel force reflection term is proposed in order to efficiently utilize the trainer’s skill in the training loop. Indeed, the trainer can interfere in the procedure whenever needed, either to guide the trainee or to suppress his (her) authority due to his (her) supposed lack of skill to continue the operation. Each haptic device is stabilized and the closed loop stability of the nonlinear system is investigated. Simulation results show the appropriate performance of the proposed control scheme. | 2019 | Conference | PDF |

Robust Impedance Control for Dual User Haptic Training System

R. Heidari, M. Motaharifar and H. D. Taghirad

International Conference on Robotics and Mechatronics | Abstract: In this paper, an impedance controller with switching parameters for a dual-user haptic training system is introduced. The trainer and the trainee are connected through their haptic consoles, and the trainee performs the surgical procedure on the environment. The trainer can intervene in the procedure by pressing a mechanical pedal; thus, the control parameters are switched to transfer the authority over the task from the trainee to the trainer. The stability of each subsystem and the closed-loop stability of the overall system are investigated. The simulation results verify the performance of the proposed controller. | 2019 | Conference | PDF |

A Dual-User Teleoperated Surgery Training Scheme Based on Virtual Fixture

A. Iranfar, M. Motaharifar, and H. D. Taghirad

2018 6th RSI International Conference on Robotics and Mechatronics (ICRoM) | Abstract: The widespread use of minimally invasive surgery (MIS) demands an appropriate framework to train novice surgeons (trainees) to perform MIS. One of the effective ways to establish a cooperative training system is to use virtual fixtures. In this paper, a guiding virtual fixture is proposed to correct the movements of the trainee according to the trainer's hand motion while performing a real MIS surgery. The proposed training framework utilizes the position signals of the trainer to modify incorrect movements of the trainee, which leads to shaping the trainee's muscle memory. Thus, after enough training sessions the trainee gains sufficient experience to perform the surgical task without any further help from the trainer. The passivity approach is utilized to analyze the stability of the system. Simulation results are also presented to demonstrate the effectiveness of the proposed method. | 2018 | Conference | PDF |

Robust control of a non-minimum phase system in presence of actuator saturation

Y Salehi, MA Sheikhi, M Motaharifar, HD Taghirad

2017 5th International Conference on Control, Instrumentation, and Automation (ICCIA) | Abstract: In this paper, robust controller synthesis for a nonminimum phase (NMP) system in the presence of actuator saturation is elaborated. The nonlinear model of the system is encapsulated with a nominal model and multiplicative uncertainties. Two robust control approaches, namely mixed sensitivity H∞ and μ-synthesis, are compared from the robust stability and robust performance points of view. Finally, through simulation results it is demonstrated that both robust control approaches have superior performance compared to that of a conventional PID controller, while the H∞ controller performs best. | 2017 | Conference | PDF |

An Observer-Based Force Reflection Robust Control for Dual User Haptic Surgical Training System

M. Motaharifar and H. D. Taghirad

2017 5th RSI International Conference on Robotics and Mechatronics (ICRoM) | Abstract: This paper investigates the controller design problem for the dual user haptic surgical training system. In this system, the trainer and the trainee are interfaced together through their haptic devices and the surgical operation on the virtual environment is performed by the trainee. The trainer is able to interfere in the procedure in case any mistake is made by the trainee. In the proposed structure, the force of the trainer's hands is reflected to the hands of the trainee to give necessary guidance to the trainee. The force signal is obtained from an unknown input high gain observer. The position of the trainee and the contact force with the environment are sent to the trainer to give him necessary information regarding the status of the surgical operation. Stabilizing control laws are also designed for each haptic device and the stability of the closed loop nonlinear system is proven. Simulation results are presented to show the effectiveness of the proposed controller synthesis. | 2017 | Conference | PDF |

Adaptive Control of Dual User Teleoperation with Time Delay and Dynamic Uncertainty

M. Motaharifar, A. Bataleblu, and H. D. Taghirad

2016 24th Iranian Conference on Electrical Engineering (ICEE) | Abstract: This technical note aims at proposing an adaptive control scheme for dual-master trilateral teleoperation in the presence of communication delay and dynamic uncertainty in the parameters. The majority of existing control schemes for trilateral teleoperation systems have been developed for linear systems or nonlinear systems without dynamic uncertainty or time delay. However, in practical teleoperation applications, the dynamic equations are nonlinear and contain uncertain parameters. In addition, time delay in the communication channel mostly exists in real applications and can affect the stability of the closed loop system. As a result, an adaptive control methodology is proposed in this paper to guarantee the stability and performance of the system despite nonlinearity, dynamic uncertainties and time delay. Simulation results are presented to show the effectiveness of the proposed adaptive controller methodology. | 2016 | Conference | PDF |

Robust H-Infinity Control of a 2RT Parallel Robot For Eye Surgery

Abbas Bataleblu, Mohammad Motaharifar, Ebrahim Abedlu, Hamid D. Taghirad

2016 4th International Conference on Robotics and Mechatronics (ICROM) | Abstract: This paper aims at designing a robust controller for a 2RT parallel robot for eye telesurgery. It presents two robust controller designs and their performance in the presence of actuator saturation limits. The nonlinear model of the robot is encapsulated with a linear model and multiplicative uncertainty using linear fractional transformations (LFT). Two different robust control methods, namely H∞ and μ-synthesis, are used and implemented. Results reveal that the controllers are capable of stabilizing the closed loop system and reducing the tracking error in the presence of actuator saturation. Simulation results are presented to show the effectiveness of the controllers compared to that of conventional controller designs. Furthermore, it is observed that the μ-synthesis controller has superior robust performance. | 2016 | Conference | PDF |

Particle Filters for Non-Gaussian Hunt-Crossley Model of Environment in Bilateral Teleoperation

Pedram Agand, Hamid D. Taghirad and Ali Khaki-Sedigh

2016 4th International Conference on Robotics and Mechatronics (ICROM) | Abstract: The optimal solution of the nonlinear identification problem in the presence of non-Gaussian measurement and process noise is generally not analytically tractable. Particle filters, also known as sequential Monte Carlo (SMC) methods, are a suboptimal solution of the recursive Bayesian approach that can provide robust, unbiased estimation for nonlinear non-Gaussian problems with the desired precision. On the other hand, Hunt-Crossley is a widespread nonlinear model for modeling telesurgery environments. Hence, in this paper, a particle filter is proposed to capture most of the nonlinearities in the telesurgery environment model. An online Bayesian framework with the conventional Monte Carlo method is employed to filter and predict the position and force signals of the environment at the slave side, respectively, to achieve transparent and stable bilateral teleoperation simultaneously. Simulation results illustrate the effectiveness of the algorithm by comparing the estimation and tracking errors of sampling importance resampling (SIR) with those of the extended Kalman filter. | 2016 | Conference | PDF |

Adaptive Control for Force-Reflecting Dual User Teleoperation Systems

Sara Abkhofte, Mohammad Motaharifar, and Hamid D. Taghirad

2016 4th International Conference on Robotics and Mechatronics (ICROM) | Abstract: The aim of this paper is to develop an adaptive force reflection control scheme for dual master nonlinear teleoperation systems. Having a sense of contact forces is very important in applications of dual master teleoperation systems such as surgery training. However, most of the previous studies for dual master nonlinear teleoperation systems are limited in the stability analysis of force reflection control schemes. In this paper, it is assumed that the teleoperation system consists of two masters and a single slave manipulator. In addition, all communication channels are subject to unknown time delays. First, adaptive controllers are developed for each manipulator. Next, the Input-to-State Stability (ISS) approach is used to analyze the stability of the closed loop system. Through simulation results, it is demonstrated that the proposed methodology is effective in a nonlinear teleoperation system. | 2016 | Conference | PDF |

An Observer-Based Adaptive Impedance-Control for Robotic Arms: Case Study in SMOS Robot

Soheil Gholami, Arash Arjmandi, and Hamid D. Taghirad

2016 4th International Conference on Robotics and Mechatronics (ICROM) | Abstract: In this paper an adaptive output-feedback impedance control is proposed to be used in environment-machine interaction applications. The proposed control is designed to achieve a desired robot impedance in the presence of possible dynamical parameter uncertainties. A high-gain observer is utilized in the control structure to achieve this objective by using only position feedback of robot joints, which in turn, reduces implementation costs and eliminates additional sensor requirements. Stability of the overall system is analyzed through input to state stability analysis. Finally, to evaluate the presented structure, computer simulations are provided and the scheme effectiveness is verified. | 2016 | Conference | PDF |

Kinematic and Workspace Analysis of DIAMOND: An Innovative Eye Surgery Robot

Amir Molaei, Ebrahim Abedloo, Hamid D. Taghirad and Zahra Marvi

2015 23rd Iranian Conference on Electrical Engineering | Abstract: This paper presents a new robot for eye surgeries, referred to as DIAMOND. It consists of a spherical mechanism that has a remote center of motion (RCM) point and is capable of orienting the surgical instrument about this unique point. Using the RCM as the insertion point of the surgery instruments makes the robot suitable for minimally invasive surgery applications. DIAMOND has two pairs of identical spherical serial limbs that form a closed kinematic chain leading to high stiffness. The spherical structure of the mechanism is compatible with the human head and the robot may perform the surgery upon the head without any collision with the patient. Furthermore, dexterity and compact size are taken into account in the mechanical design of the robot. The workspace of the robot is a complete singularity-free sphere that covers the region needed for any eye surgery. In this paper, a comparison between different types of existing eye surgery robots is presented, the structure of the mechanism is described in detail, and the kinematic analysis of the robot is investigated. | 2015 | Conference | PDF |

Eye-RHAS Manipulator: From Kinematics to Trajectory Control

Ebrahim Abedloo, Soheil Gholami, and Hamid D. Taghirad

2015 3rd RSI International Conference on Robotics and Mechatronics (ICROM) | Abstract: One of the challenging issues in robotic technology is the use of robotic arms for surgeries, especially in eye operations. Among the recently developed mechanisms for this purpose, there exists a robot, called Eye-RHAS, that presents sustainable precision in vitreo-retinal eye surgeries. In this work, the closed-form dynamical model of this robot has been derived by the Gibbs-Appell method. Furthermore, this formulation is verified through the SimMechanics Toolbox of MATLAB. Finally, the robot is simulated in real-time trajectory control in a teleoperation scheme. The tracking errors show the effectiveness and applicability of the dynamic formulation for use in teleoperation schemes. | 2015 | Conference | PDF |

Vision-Based Kinematic Calibration of Spherical Robots

Pedram Agand, Hamid D. Taghirad and Amir Molaee

2015 3rd RSI International Conference on Robotics and Mechatronics (ICROM) | Abstract: In this article, a method to obtain the spatial coordinates of a spherical robot's moving platform using a single camera is proposed and experimentally verified. The proposed method is an accurate, flexible and low-cost tool for the kinematic calibration of spherical-workspace mechanisms to achieve the desired accuracy in position. The sensitivity and efficiency of the provided method are thus evaluated. Furthermore, optimization of the camera location is outlined subject to the prescribed cost functions. Finally, experimental analysis of the proposed calibration method on the ARAS Eye surgery Robot (DIAMOND) is presented, in which the accuracy obtained is three to six times better than that of the previous calibration. | 2015 | Conference | PDF |

Sliding Impedance Control for Improving Transparency in Telesurgery

Samim Khosravi, Arash Arjmandi and Hamid D. Taghirad

2014 Second RSI/ISM International Conference on Robotics and Mechatronics (ICRoM) | Abstract: This paper describes a novel control scheme for teleoperation with constant communication time delay and soft tissue in the environment at the slave side. This control scheme combines fidelity criteria with sliding impedance. Fidelity is a measure for evaluating a telesurgical system when the environment at the slave side contains soft tissues. Sliding impedance is used to stabilize the teleoperation system with constant time delays and improve tracking performance in the presence of uncertainties in slave dynamics. The control system contains impedance and sliding impedance control in the master and slave manipulators, respectively. Parameters of the sliding impedance controller are obtained from a fidelity optimization problem, while parameters of the master impedance controller are determined so as to guarantee stability of the entire teleoperation system. Simulation results demonstrate suitable performance of position and force tracking of the telesurgical system. | 2014 | Conference | PDF |

Analytical Passivity Analysis for Wave-based Teleoperation with Improved Trajectory Tracking

Bita Fallahi, Hamid D. Taghirad

Canadian Committee for the Theory of Machines and Mechanisms | Abstract: In wave-based teleoperation, although passivity is ensured for any time delay, tracking performance is usually distorted due to the bias term introduced by wave transmission. To improve the position tracking error, one way is to augment the forward wave with a corrective term and achieve passivity by tuning the bandwidth of a low-pass filter in the forward path. However, this filter fails to meet the passivity condition in contact with stiff environments, especially at steady state. In this paper, a new method is proposed, and an analytical solution for passivity at steady state and a semi-analytical solution for all other frequencies are presented. This method significantly reduces the complexity of the closed-loop system, ensures passivity in contact with stiff environments, and improves trajectory tracking. Simulation results are presented to show the effectiveness of the proposed method. | 2011 | Conference | PDF |

Force Control of Intelligent Laparoscopic Forceps

Soheil Kianzad, Soheil O. Karkouti, and Hamid D. Taghirad

Journal of Medical Imaging and Health Informatics | Abstract: Actuators play an important role at the end-effectors of Minimally Invasive Surgery (MIS) robots. Having local, lightweight and powerful actuators would increase the dexterity of surgeons. Shape Memory Alloy (SMA) actuators are considered good candidates and have shown significant capability in producing the force needed for grasping. Most of the current MIS systems provide surgeons with visual feedback. However, in many operations this information cannot help surgeons to diagnose the manipulated tissue accurately. Therefore, having force and tactile information is also necessary. In order to have this information, local sensors are needed to give force feedback. This would also help to control the wire tension and prevent excessive force that causes tissue damage. In this paper, a novel design of forceps that uses antagonistic SMA actuators is presented. This configuration helps to increase force and speed and also eliminates the bias spring used in similar works. Moreover, this antagonistic design makes it possible to place the force sensors at the back part of the forceps instead of attaching them to the jaws, which results in a smaller forceps design. To control the exerted force, an analytical model of the system and a force control method are also presented. This enhanced design seems to address some of the existing shortcomings of similar models and remove them effectively. | 2011 | Journal | PDF |

Identification and Robust H-Infinity Control of the Rotational/Translational Actuator System

Mahdi Tavakoli, Hamid D. Taghirad, and Mehdi Abrishamchian

International Journal of Control Automation and Systems | Abstract: The Rotational/Translational Actuator (RTAC) benchmark problem considers a fourth-order dynamical system involving the nonlinear interaction of a translational oscillator and an eccentric rotational proof mass. This problem has been posed to investigate the utility of a rotational actuator for stabilizing translational motion. In order to experimentally implement any of the model-based controllers proposed in the literature, the values of model parameters are required, which are generally difficult to determine rigorously. In this paper, an approach to the least-squares estimation of the parameters of a system is formulated and practically applied to the RTAC system. In addition, this paper shows how to model a nonlinear system as a linear uncertain system via nonparametric system identification, in order to provide the information required for linear robust H-Infinity control design. This method is also applied to the RTAC system, which demonstrates severe nonlinearities due to the coupling from the rotational motion to the translational motion. Experimental results confirm that this approach can effectively condense all the nonlinearities, uncertainties, and disturbances within the system into a favorable perturbation block. | 2005 | Journal | PDF |