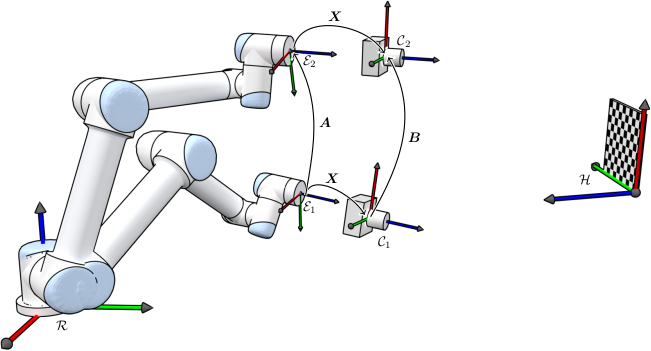

Estimating the position and orientation of a rigid object from vision is still a challenging problem in robotics and computer vision. In robotics, it plays a vital role in visual servoing systems, especially position-based eye-in-hand visual servoing. The inputs to a pose estimator are the 3D coordinates of a set of feature points attached to the target, expressed in the object frame, together with their 2D projections in the image plane. At least six independent nonlinear equations are required to determine the 6-DOF relative pose between the camera and the object. Since each feature point contributes two projection equations (one per image coordinate), this set of equations can be obtained from at least three non-collinear feature points on the object, using the geometric relations between the camera frame and the object frame.
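To make the equation count concrete, here is a minimal Python sketch. It assumes NumPy, an ideal pinhole camera with focal length `f` in normalized units, and a roll-pitch-yaw parameterization of orientation; the function names are hypothetical and are not the group's code. It stacks the two projection equations of each feature point into one residual vector, so three points yield six equations.

```python
import numpy as np


def rotation_from_rpy(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) @ Ry(pitch) @ Rx(roll) (ZYX convention, assumed)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def project(pose, points_obj, f=1.0):
    """Pinhole projection of object-frame points.

    pose = [X, Y, Z, roll, pitch, yaw] of the object frame expressed in the camera frame.
    Returns an (N, 2) array: two image coordinates (two equations) per point.
    """
    pose = np.asarray(pose, dtype=float)
    R = rotation_from_rpy(*pose[3:6])
    pc = points_obj @ R.T + pose[:3]      # object points expressed in the camera frame
    return f * pc[:, :2] / pc[:, 2:3]     # perspective division by depth


def residuals(pose, points_obj, measured_uv, f=1.0):
    """Stacked 2N-vector of reprojection errors; the correct pose makes it zero."""
    return (project(pose, points_obj, f) - measured_uv).ravel()


# Three non-collinear points give 2 * 3 = 6 equations for the 6 pose unknowns.
points = np.array([[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [-0.1, -0.1, 0.0]])
true_pose = [0.02, -0.01, 1.0, 0.1, -0.05, 0.2]
uv = project(true_pose, points)
print(residuals(true_pose, points, uv).shape)  # (6,)
```

A pose estimator, whether numerical or predictive, then searches for the six pose parameters that drive `residuals` to zero.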

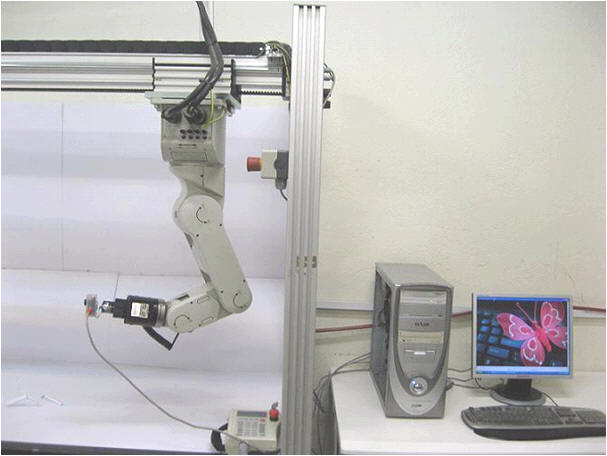

Eye-in-Hand Visual Servoing Setup

In general, methods for solving the pose estimation problem fall into two categories, which we classify as numerical techniques and predictive methods. Classic numerical approaches use nonlinear optimization, typically formulating pose calculation as a nonlinear least-squares problem. However, these methods require a suitable initial guess to converge to an accurate solution, and there is no guarantee that they converge at all, asymptotically or otherwise. Furthermore, their computational cost can be prohibitive in real-time pose estimation scenarios. In contrast to the classic numerical methods, Chien-Ping Lu and G. D. Hager introduced a comparatively fast numerical technique that converges to the solution even when the initial guess is inaccurate. A serious drawback of numerical methods in general is their high sensitivity to feature extraction errors, noise, and disturbances, which can lead to inaccurate pose estimates.

An alternative approach to pose estimation is the family of predictive methods, which have been used to estimate the target position in noisy and uncertain settings. The Extended Kalman Filter (EKF) is the best-known of these approaches. The EKF can reject noise and disturbances and cope with model uncertainty. Furthermore, it can improve both the accuracy and the speed of the estimation process, which is vital in online applications.
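The predictive approach can be illustrated with a minimal EKF sketch in Python (NumPy). It assumes a constant-velocity motion model with a 12-element state (the six pose parameters and their rates), a pinhole projection of known object points as the measurement model, and a finite-difference measurement Jacobian in place of an analytic one. The class name, the noise levels `q` and `r`, and the initial covariance `p0` are illustrative assumptions, not the group's published implementation.

```python
import numpy as np


def rot(roll, pitch, yaw):
    """ZYX roll-pitch-yaw rotation matrix (assumed convention)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    return np.array([
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ])


def h(x, pts, f):
    """Measurement model: stacked image coordinates predicted from pose x[:6]."""
    pc = pts @ rot(*x[3:6]).T + x[:3]
    return (f * pc[:, :2] / pc[:, 2:3]).ravel()


def numerical_jacobian(fun, x, eps=1e-6):
    """Forward-difference Jacobian of fun at x (stands in for the analytic one)."""
    y0 = fun(x)
    J = np.zeros((y0.size, x.size))
    for i in range(x.size):
        dx = np.zeros_like(x)
        dx[i] = eps
        J[:, i] = (fun(x + dx) - y0) / eps
    return J


class PoseEKF:
    """EKF with state [X, Y, Z, roll, pitch, yaw] followed by their six rates."""

    def __init__(self, pts, f, dt, x0, p0=0.1, q=1e-3, r=1e-4):
        self.pts, self.f = pts, f
        self.x = np.asarray(x0, dtype=float).copy()
        self.P = p0 * np.eye(12)
        self.F = np.eye(12)
        self.F[:6, 6:] = dt * np.eye(6)        # constant-velocity transition
        self.Q = q * np.eye(12)                # process noise (assumed isotropic)
        self.R = r * np.eye(2 * len(pts))      # image-measurement noise (assumed isotropic)

    def predict(self):
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q

    def update(self, z):
        hx = lambda s: h(s, self.pts, self.f)
        H = numerical_jacobian(hx, self.x)     # re-linearize about the current estimate
        S = H @ self.P @ H.T + self.R          # innovation covariance
        K = np.linalg.solve(S, H @ self.P).T   # Kalman gain P H^T S^-1 (S and P symmetric)
        self.x = self.x + K @ (z - hx(self.x))
        self.P = (np.eye(12) - K @ H) @ self.P
```

For each camera frame, call `predict()` and then `update(z)` with the stacked image coordinates `z`. Re-linearizing the projection about the current estimate at every step is what makes the filter "extended", and the process and measurement covariances are where noise and disturbance rejection is tuned.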

The visual robotics group began its research by equipping one of our industrial robotic manipulators with a camera in order to automatically track dynamic objects within the robot's workspace. This goal was fully accomplished on our Mitsubishi 5-DoF industrial robot in an eye-in-hand configuration. The first algorithm developed for tracking moving objects applied the EKF to objects with marked features. The group then focused on tracking featureless objects, in particular with kernel-based visual servoing methods. To compute the visual kernel of the images efficiently, the Fourier transform and the log-polar transform are used. We then introduced a sliding mode controller design for kernel-based visual servoing.
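To illustrate why these two transforms are useful, here is a Fourier-Mellin style sketch in Python, assuming a grayscale image stored as a 2D NumPy array; it is not necessarily how the group computes its kernels. The magnitude of the 2D FFT is unchanged by (circular) image translation, and resampling that magnitude on a log-polar grid turns image rotation into a shift along the angle axis and, approximately, image scaling into a shift along the log-radius axis. The function names and grid sizes are hypothetical.

```python
import numpy as np


def fft_magnitude(img):
    """Centered 2D Fourier magnitude; unchanged by circular translation of img."""
    return np.abs(np.fft.fftshift(np.fft.fft2(img)))


def log_polar(mag, n_rho=64, n_theta=64):
    """Nearest-neighbour resampling of a centered spectrum onto a log-polar grid.

    Rows index log-radius and columns index angle, so rotating the image shifts the
    result along the columns and scaling the image shifts it (approximately) along the rows.
    """
    h, w = mag.shape
    cy, cx = h // 2, w // 2
    max_r = min(cy, cx)
    rho = np.exp(np.linspace(0.0, np.log(max_r), n_rho, endpoint=False))
    # The magnitude spectrum of a real image is point-symmetric, so [0, pi) suffices.
    theta = np.linspace(0.0, np.pi, n_theta, endpoint=False)
    r, t = np.meshgrid(rho, theta, indexing="ij")
    ys = np.clip(np.round(cy + r * np.sin(t)).astype(int), 0, h - 1)
    xs = np.clip(np.round(cx + r * np.cos(t)).astype(int), 0, w - 1)
    return mag[ys, xs]


rng = np.random.default_rng(0)
img = rng.random((64, 64))
shifted = np.roll(img, shift=(5, 3), axis=(0, 1))
print(np.allclose(fft_magnitude(img), fft_magnitude(shifted)))  # True
print(log_polar(fft_magnitude(img)).shape)                        # (64, 64)
```

Nearest-neighbour sampling keeps the sketch short; bilinear interpolation would give smoother log-polar maps at a modest extra cost.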

The main goal is to track a target object without any guiding features such as points or lines. In the kernel-based approach, a weighted sum of the image signal, or of its Fourier transform, is used as the measurement for tracking; this is known as the kernel measurement. The tracking error, defined as the difference between the current and desired kernel measurements, is the input to an integral sliding mode controller. By combining the kernel measurement with sliding mode control, the resulting system outperforms conventional kernel-based visual servoing. The proposed method is implemented on the Mitsubishi industrial robot and compared with a conventional kernel-based visual servoing approach under different initial conditions. The stability of the proposed algorithm is analyzed using Lyapunov theory. Uncertainties such as image noise, image blur, and camera calibration errors can affect the stability of the algorithm and may cause the target object to leave the image partially or completely, resulting in task failure. To reduce the effect of bounded uncertainties, the controller parameters are designed automatically based on the sliding condition.
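A minimal Python sketch of this control loop follows, under explicit assumptions: the kernel measurement is a weighted sum of the image with each kernel (Gaussian kernels are used here only as an example), the kernel Jacobian `J` that maps the camera velocity command to the rate of change of the measurement is known and has full row rank, and a saturation function with boundary-layer width `phi` replaces the sign function to limit chattering. The class and parameter names (`lam`, `eta`, `phi`) are hypothetical, and the closed loop at the end is a toy model rather than the robot.

```python
import numpy as np


def gaussian_kernel(shape, center, sigma):
    """Example weighting kernel K(x); Gaussian chosen purely for illustration."""
    ys, xs = np.indices(shape)
    d2 = (ys - center[0]) ** 2 + (xs - center[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))


def kernel_measurement(img, kernels):
    """xi_i = sum over pixels of K_i(x) * I(x): one weighted sum per kernel."""
    return np.array([np.sum(k * img) for k in kernels])


class IntegralSMC:
    """Integral sliding mode control on the kernel-measurement error."""

    def __init__(self, lam, eta, phi, dt):
        self.lam, self.eta, self.phi, self.dt = lam, eta, phi, dt
        self.integral = None

    def control(self, xi, xi_star, J):
        e = xi - xi_star                              # tracking error
        if self.integral is None:
            self.integral = np.zeros_like(e)
        self.integral = self.integral + e * self.dt   # running integral of e
        s = e + self.lam * self.integral              # integral sliding surface
        sat = np.clip(s / self.phi, -1.0, 1.0)        # boundary layer instead of sign(s)
        # With J of full row rank, this gives J v = -(lam * e + eta * sat(s / phi)).
        return -np.linalg.pinv(J) @ (self.lam * e + self.eta * sat)


# Toy closed loop: the kernel measurement is assumed to evolve as d(xi)/dt = J v.
J = np.array([[2.0, 0.3], [0.1, 1.5]])
ctrl = IntegralSMC(lam=1.0, eta=0.5, phi=0.1, dt=0.01)
xi_star = np.zeros(2)
xi = np.array([1.0, -0.5])
for _ in range(1000):
    xi = xi + (J @ ctrl.control(xi, xi_star, J)) * ctrl.dt
print("final error:", np.linalg.norm(xi - xi_star))
```

With the sliding surface s = e + λ∫e dt and J J⁺ = I, this command gives ṡ = −η sat(s/φ), so the Lyapunov function V = ½ sᵀs satisfies V̇ = −η sᵀ sat(s/φ) ≤ 0. If a bounded uncertainty d enters ṡ, choosing η larger than the largest component bound of d keeps V̇ negative outside the boundary layer, which is the kind of sliding condition the parameter design relies on.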

Another avenue examined in this research theme was the use of monocular and stereo vision for environment perception on a mobile robotic platform. This research was carried out in collaboration with our Autonomous Robotics group and is still being pursued.

Collaborators of this research group include Prof. Farrokh Janabi-Sharifi, Ryerson University, Canada.

Javad Ramezanzadeh, Fatemeh Bakhshandeh, Mahsa Parsapour, Parisa Masnadi, Aida Farahani, Seyed Farokh Atashzar, Mahya Shahbazi, Sahar Sedaghati, Homa Ammari, Mehrnaz Salehian, Soheil Rayatdoost, Mohammad Reza Sadeghi, Farzaneh Sedaghat.