Abstract:

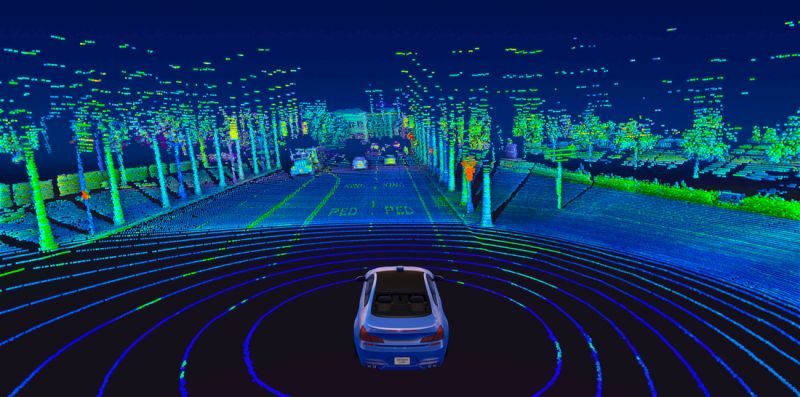

Cable-driven and parallel robots have been gaining attention among researchers because of their unique characteristics and applications. A simple structure, high payload capacity, agile movement, and deployability are the main characteristics that distinguish cable robots from other types of manipulators and make them suitable for applications such as imaging, cranes, and agriculture. This presentation gives further consideration to interdisciplinary research topics such as the dynamic analysis and control synthesis of parallel and cable-driven manipulators using modern and intelligent approaches.

The Kamalolmol® robot, developed in the ARAS research group, is a representative cable-driven robot: a fast deployable edutainment robot for calligraphy and painting (chiaroscuro) applications. In addition, ARAS research combines the simplicity of cable robots with graph-based optimization and perception algorithms to create commercial inspection and imaging tools for various applications. This webinar presents the underlying concepts of such systems and the current state of their development.

Date: Tuesday, March 8, 2022 (17 Esfand 1400)

Time: 20:30-21:30 (Iran Standard Time, GMT+3:30)

12:00-13:00 (Eastern Standard Time, GMT-5:00)